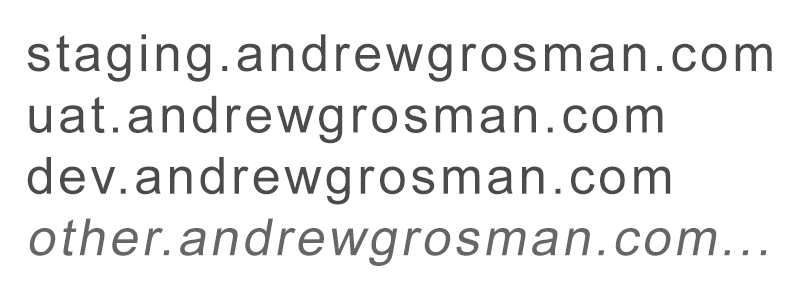

Over the last three years I have done somewhere between 40 and 50 technical SEO audits, and at least 10 of those sites had their staging or UAT (User Acceptance Testing) version indexed by Google. Keeping a staging site out of the index matters because it stops draft or unfinished content from showing up in search results. The worst case is a staging site that mirrors the live site: Google then finds two copies of the same content, and you have a duplicate content problem. Here are some ways to prevent Google from indexing your staging website:

- Use a robots.txt file: A robots.txt file is a plain-text file that tells search engine bots which pages or sections of a website they may or may not crawl. To stop Google from crawling your staging site, upload a robots.txt file to the root directory of the staging website containing the following rules:

```text
User-agent: *
Disallow: /
```

These two lines tell every crawler (`User-agent: *`) not to crawl any path on the site (`Disallow: /`). Keep in mind that robots.txt controls crawling, not indexing: if other pages link to a staging URL, Google can still index that URL without its content, so password protection is the more reliable option.

- Password protect your website: To keep Google from crawling your staging website, you can put it behind a password, either with a login page or with a plugin that restricts access to the site. Search engine bots cannot log in, so they cannot crawl the site and its pages will not be indexed.
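If the staging site is served by a Python application, one way to add password protection without a plugin is HTTP Basic authentication in front of the app. The sketch below assumes a WSGI app; `require_basic_auth`, `hello_app` and the `staging`/`change-me` credentials are hypothetical placeholders, not part of any plugin or framework. Any request without valid credentials, Googlebot included, gets a `401 Unauthorized` response and no page content.

```python
import base64
import hmac
from typing import Callable, Iterable

# Hypothetical credentials for illustration only; load real ones from your config.
STAGING_USER = "staging"
STAGING_PASSWORD = "change-me"


def require_basic_auth(app: Callable, user: str = STAGING_USER,
                       password: str = STAGING_PASSWORD) -> Callable:
    """Wrap a WSGI app so every request must send valid HTTP Basic credentials."""
    # The Authorization header carries base64("user:password") after "Basic ".
    expected = base64.b64encode(f"{user}:{password}".encode("utf-8"))

    def middleware(environ: dict, start_response: Callable) -> Iterable[bytes]:
        scheme, _, token = environ.get("HTTP_AUTHORIZATION", "").partition(" ")
        # compare_digest avoids leaking, through timing, how much of the token matched.
        if scheme.lower() == "basic" and hmac.compare_digest(
                token.strip().encode("utf-8"), expected):
            return app(environ, start_response)
        # Missing or wrong credentials: bots and visitors get a 401 and no content.
        start_response("401 Unauthorized", [
            ("WWW-Authenticate", 'Basic realm="staging"'),
            ("Content-Type", "text/plain"),
        ])
        return [b"Authentication required"]

    return middleware


def hello_app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Stand-in for the real staging application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"staging site"]


app = require_basic_auth(hello_app)
```

To try it locally, pass `app` to `wsgiref.simple_server.make_server("127.0.0.1", 8000, app)` and call `serve_forever()` on the result. On a real staging server you would more often configure the same check in the web server or hosting panel, and serve the site only over HTTPS, because Basic credentials are merely base64-encoded, not encrypted.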

- Use a “noindex” meta tag: You can also add a “noindex” meta tag to the `<head>` section of each page's HTML. This tells search engines not to index the page. For Google to see the tag, the page must not be blocked by robots.txt. Here is what the tag looks like:

```html
<meta name="robots" content="noindex">
```
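To spot-check that staging pages actually carry the tag, you could parse their HTML with Python's standard-library `html.parser`. This is a minimal sketch: `RobotsMetaFinder` and `has_noindex` are hypothetical helper names, and the check only inspects the HTML string you pass in, for example the source of a staging page you have downloaded.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").strip().lower() == "robots":
            # content can hold several comma-separated directives, e.g. "noindex, nofollow".
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with a noindex directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return "noindex" in finder.directives
```

Self-closing tags such as `<meta ... />` are handled too, because `HTMLParser` routes them through `handle_starttag` by default.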

Any of the above methods will help keep the content of your staging website away from Google and other search engines until you are ready to publish it on your live site.
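Before relying on the robots.txt tip, you can check the rules with Python's built-in `urllib.robotparser`, which evaluates them roughly the way a well-behaved crawler would (it does not reproduce every detail of Google's own parser). In this sketch `blocks_everything` is a hypothetical helper and the sample paths are made up; it verifies crawl rules only and says nothing about whether a URL is already indexed.

```python
from urllib.robotparser import RobotFileParser

# The staging rules from the robots.txt tip.
STAGING_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def blocks_everything(robots_txt: str, paths: list[str], agents: list[str]) -> bool:
    """Return True if no listed user agent may crawl any listed path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Mark the rules as loaded; can_fetch() denies everything until this is set
    # (recent Python versions already do this inside parse()).
    parser.modified()
    return not any(parser.can_fetch(agent, path) for agent in agents for path in paths)
```

For example, `blocks_everything(STAGING_ROBOTS_TXT, ["/", "/blog/"], ["Googlebot"])` returns `True`, while a file containing only `User-agent: *` and an empty `Disallow:` line returns `False`.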
